Nearly two-thirds of organizations are stuck in the pilot phase with AI. They run experiments, test use cases, and see promising results. Then nothing scales.

McKinsey’s latest State of AI report (November 2025) reveals the pattern: 90% of organizations regularly use AI, but only 39% report enterprise-level EBIT impact. The gap between adoption and value isn’t a mystery. It’s a choice.

What the data shows

Three findings from the survey stand out:

Most organizations (nearly two-thirds): still experimenting or piloting, chasing efficiency gains.

High performers: setting growth and innovation as objectives alongside efficiency, redesigning workflows, and transforming business models.

The gap: 62% now experiment with AI agents, but few have moved beyond testing to production systems that reshape how work flows.

Not efficiency, but expansion

Here’s the contrast: 80% of companies set efficiency as their AI objective. Cut costs, automate tasks, compress timelines. That’s table stakes.

(Source: McKinsey State of AI Report)

High performers add a second intent: growth. They ask what new capabilities AI unlocks, what products become possible, and what customer experiences were previously out of reach.

Efficiency optimizes what exists. Growth expands what’s possible.

The organizations seeing material value aren't just automating workflows; they're redesigning them. They're asking: if this task now takes 10% of the time it used to, what does the team do with the freed-up 90%? If we remove this coordination bottleneck, what new bets become viable?

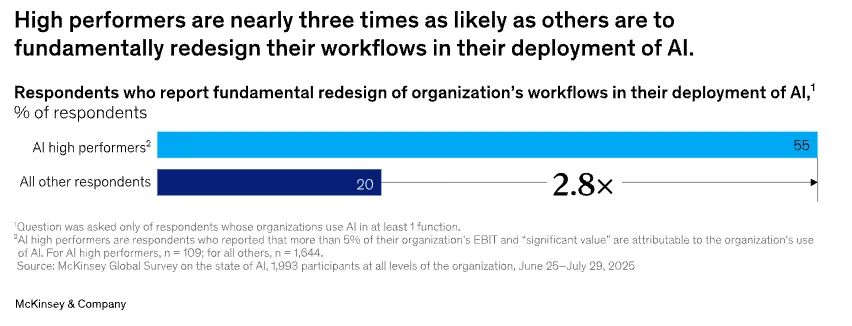

The workflow redesign test

Most AI deployments layer intelligence on top of existing processes. A copilot here, a classification model there, a summarization tool for that document type.

High performers reverse the question: If we assume AI can handle these tasks, what would the workflow look like from scratch?

That’s not augmentation. That’s transformation.

Example pattern: Radiology departments don't just use AI to flag potential findings. They redesign the entire diagnostic workflow, routing routine reads through automated triage while radiologists focus on complex cases, with AI pre-assembling patient history and relevant priors.

Another: Prior authorization teams don't just speed up approval requests. They restructure coverage determination so standard cases auto-approve based on clinical guidelines, while utilization management focuses on edge cases, care optimization, and high-cost interventions.
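For engineering teams, the routing idea behind the prior authorization example can be sketched in a few lines. This is a minimal, hypothetical Python sketch, not a clinical or payer implementation. The `Case` fields, the `route` function, and both thresholds are illustrative assumptions. Real coverage determination would depend on a payer's own guidelines, models, and review policy.

```python
from dataclasses import dataclass


@dataclass
class Case:
    case_id: str
    meets_guidelines: bool   # hypothetical: result of a rules check against clinical guidelines
    model_confidence: float  # hypothetical: 0.0-1.0 score from an AI classifier
    estimated_cost: float    # hypothetical: projected cost of the requested intervention


def route(case: Case, min_confidence: float = 0.9, high_cost_threshold: float = 10_000.0) -> str:
    """Return 'auto_approve' for standard cases and 'human_review' for everything else."""
    # High-cost interventions always go to utilization management, whatever the model says.
    if case.estimated_cost >= high_cost_threshold:
        return "human_review"
    # Standard case: meets guidelines and the model is confident, so approve automatically.
    if case.meets_guidelines and case.model_confidence >= min_confidence:
        return "auto_approve"
    # Edge cases (low confidence or outside guidelines) are escalated to people.
    return "human_review"


if __name__ == "__main__":
    cases = [
        Case("A1", True, 0.97, 1_200.0),
        Case("A2", True, 0.62, 800.0),
        Case("A3", True, 0.99, 45_000.0),
        Case("A4", False, 0.95, 300.0),
    ]
    for c in cases:
        print(c.case_id, route(c))
```

One design choice matters here. The router can only approve or escalate, and it never denies. Automation absorbs the routine volume, and people keep every judgment call, which matches the "expanded scope" role described above.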

The workflow redesign loop:

- Identify the bottleneck or high-volume task

- Prototype an AI-native version (not an AI-assisted one)

- Map the new human role (expanded scope, not the old scope done faster)

- Test enterprise impact (EBIT, not use-case ROI)

- Scale if the impact holds

The agent signal

The fact that 62% of organizations are experimenting with AI agents isn't just a sign of technology maturity. It signals that organizations are starting to think about automation differently.

Agents don’t just complete tasks. They coordinate across systems, make sequential decisions, and route work based on context. That’s infrastructure, not tooling.

The question isn’t whether to experiment with agents. It’s whether your organization is ready to redesign workflows to take advantage of what agents enable: continuity of context, asynchronous coordination, and decision automation.

Implications for product and engineering leaders

If you’re building AI features, here’s the fork in the road:

Path one: Add AI capabilities that make existing workflows 20% faster. Ship incremental value. Stay in the pilot phase with everyone else.

Path two: Identify one workflow that could expand your team’s leverage by 3-5x if redesigned. Prototype the AI-native version. Measure enterprise impact, not feature adoption.

The data suggests most organizations will stay on path one. High performers are choosing path two.

Where to start

Pick one workflow where your team spends significant time but sees marginal strategic impact. Map the current state. Then ask: if coordination and routine decisions were automated, what would this process look like?

Don’t optimize the workflow. Redesign it.

The gap between pilot and enterprise value isn’t about better models or more compute. It’s about asking better questions.